Intelligent Design: Constructing next-generation data centers for the AI boom

With their massive energy needs, data centers will rely on a range of innovative technologies and construction methods

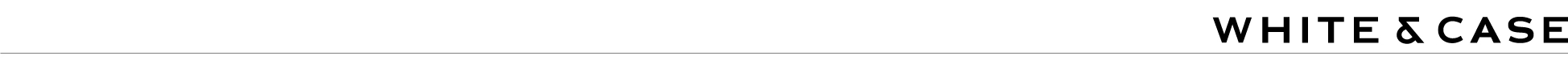

Data centers have long been the backbone of the digital economy, enabling everything from cloud storage to streaming services. However, rapid advances in artificial intelligence (AI) are fueling an unprecedented surge in demand for new data centers. The design and architecture of this next generation of data centers will be shaped by the massive computational power that AI applications require, with solutions evolving quickly in areas such as advanced cooling, higher rack densities, energy efficiency and networking. The rapid rise in demand for AI applications is also changing construction methods, with modular construction growing in popularity, while considerations of footprint, power consumption and latency are driving interest in vertical construction and captive power solutions.

Heat and cooling driving design of AI data centers

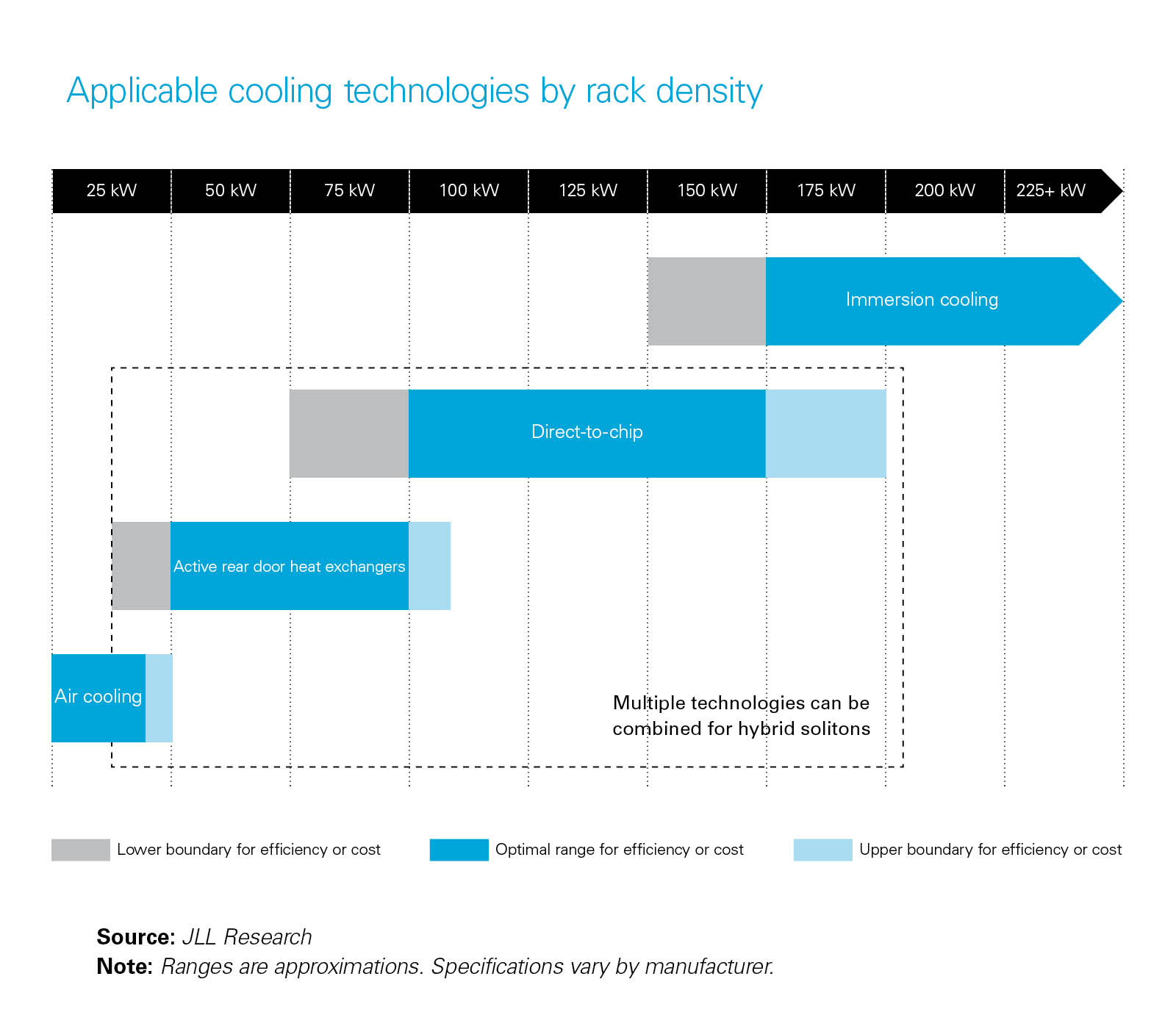

The high computing power required for AI applications means that AI data centers operate at higher power densities, and their servers generate more heat than those in traditional data centers. Traditional data centers have mainly relied on electrically powered air cooling to keep servers within safe operating temperatures, but the higher power density and resulting heat output of AI data centers is driving innovation and change in the design of their cooling solutions.

Liquid cooling is increasingly emerging as a solution to the inefficiencies of cooling AI data centers with traditional air-cooling methods. The most common liquid cooling solution to date has been direct-to-chip (DTC) technology, in which a liquid coolant directly cools the most power-intensive server components, such as graphics processing units (GPUs). Immersion cooling, in which the entire server is submerged in a special non-conductive liquid (through either a single-phase or a two-phase process), is a potentially promising solution for AI data centers. However, although it has been in research and development for some years, the technology has seen limited commercial deployment to date, and environmental and social (E&S) concerns, together with the phase-out of "forever chemicals" (per- and polyfluoroalkyl substances, or PFAS) used in some of the fluids, may prevent immersion cooling from playing a major role in cooling AI data centers. Different cooling systems will suit different types of data centers and rack densities, and we recently explored in more detail the key factors to consider when selecting a data center cooling method in light of these evolving and emerging technologies.
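To illustrate why air cooling struggles at high rack densities, the sketch below applies the standard sensible-heat relation Q = ρ·V̇·c_p·ΔT to compare the volume of air and of water needed to carry away the same heat load. It is a simplified illustration, not a design calculation: the 100 kW rack, the 10 K coolant temperature rise and the approximate property values are assumptions, and it treats all of the rack's electrical input as heat removed by a single coolant, whereas in practice direct-to-chip systems capture only part of the heat in liquid and air handles the remainder.

```python
"""Compare air vs. water flow needed to remove the same heat load.

Illustrative only: property values are approximate room-temperature
textbook figures; the rack load and temperature rise are hypothetical.
"""

# Approximate coolant properties near 20-25 degrees C
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_KG_K = 1005.0
WATER_DENSITY_KG_M3 = 997.0
WATER_CP_J_KG_K = 4186.0


def volumetric_flow_m3_s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Flow rate needed to absorb heat_w with a coolant temperature rise of delta_t_k.

    Rearranges Q = rho * V_dot * c_p * dT to solve for V_dot.
    """
    return heat_w / (density * cp * delta_t_k)


# Hypothetical rack: assume essentially all electrical input ends up as heat
RACK_HEAT_W = 100_000.0
DELTA_T_K = 10.0

air_flow = volumetric_flow_m3_s(RACK_HEAT_W, AIR_DENSITY_KG_M3, AIR_CP_J_KG_K, DELTA_T_K)
water_flow = volumetric_flow_m3_s(RACK_HEAT_W, WATER_DENSITY_KG_M3, WATER_CP_J_KG_K, DELTA_T_K)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000:.2f} L/s")
print(f"Air needs roughly {air_flow / water_flow:,.0f}x the volume flow of water")
```

Under these assumptions, air needs roughly 8.3 m³/s against about 2.4 L/s of water, a volume ratio of around 3,500 to 1, which helps explain why air cooling becomes harder to scale as rack densities rise.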

AI data center design can also reduce heat and the resulting cooling demand through less technical means, for example by increasing the spacing between server racks and designing buildings to make greater use of natural ventilation. Building data centers in colder climates can also reduce cooling requirements. Demand for AI data centers could accelerate this trend, particularly as companies develop subterranean data centers in countries such as Iceland and Norway, which offer additional cooling efficiencies compared with the conventional above-ground digital infrastructure already well established across the Nordic region. Underground construction is, however, expensive and notoriously unpredictable in terms of time and cost, and contractors are prepared to take only limited construction risk, particularly where shaft sinking and tunnel boring are required.

The excess heat from AI data centers also creates a largely untapped opportunity: AI data centers and associated infrastructure can be designed and built to capture heat from AI servers for use in district heating systems or other industrial applications. While still in its infancy, heat reuse could ultimately become an additional revenue stream for AI data centers, particularly those located near end users in colder climates, where there is a market for excess heat.
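A back-of-the-envelope sketch of how this heat reuse opportunity might be sized: recovered heat is the IT load multiplied by the share of heat actually captured and by the hours in which an offtaker needs it, and gross revenue follows from an offtake price. Every input, and the hypothetical HeatReuseCase structure itself, is an illustrative assumption rather than market or project data. In practice, data center waste heat is often low-grade and may need upgrading (for example with heat pumps) before it can feed a district heating network, a cost this sketch does not model.

```python
"""Rough annual estimate of heat that could be sold to a district heating network.

All inputs are hypothetical placeholders, not market or project data.
"""

from dataclasses import dataclass


@dataclass
class HeatReuseCase:
    it_load_mw: float             # average IT load, assumed to be dissipated as heat
    recoverable_fraction: float   # share of that heat actually captured (0-1)
    demand_hours_per_year: float  # hours with an offtaker for the heat (e.g. heating season)
    price_per_mwh: float          # hypothetical heat offtake price, in any currency


def recovered_heat_mwh(case: HeatReuseCase) -> float:
    """Heat delivered to the offtaker over a year, in MWh (thermal)."""
    if not 0.0 <= case.recoverable_fraction <= 1.0:
        raise ValueError("recoverable_fraction must be between 0 and 1")
    if not 0.0 <= case.demand_hours_per_year <= 8760.0:
        raise ValueError("demand_hours_per_year must be between 0 and 8760")
    return case.it_load_mw * case.recoverable_fraction * case.demand_hours_per_year


def annual_heat_revenue(case: HeatReuseCase) -> float:
    """Gross revenue, before the cost of heat pumps, pipework and operations."""
    return recovered_heat_mwh(case) * case.price_per_mwh


# Hypothetical 20 MW facility near a district heating network with a long heating season
example = HeatReuseCase(it_load_mw=20.0, recoverable_fraction=0.6,
                        demand_hours_per_year=5000.0, price_per_mwh=30.0)
print(f"Recovered heat: {recovered_heat_mwh(example):,.0f} MWh/year")
print(f"Gross revenue:  {annual_heat_revenue(example):,.0f} per year")
```

With these placeholder inputs the sketch estimates 60,000 MWh of heat and gross revenue of 1.8 million currency units a year; the demand-hours input is where proximity to end users in colder climates matters most.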

Looking ahead, innovations and efficiencies in AI models could also significantly reduce the computing and energy demands facing the current generation of AI data centers, making heat management and cooling less challenging for new facilities.

Supply chain challenges

As with the wider construction industry, supply chain issues remain challenging for the data center industry, with AI data centers facing their own particular challenges.

The energy-intensive nature of AI data centers means that they require more mechanical and electrical (M&E) components than traditional data centers. As construction of AI data centers ramps up, lead times for certain equipment may lengthen, particularly for electrical components, where suppliers already face high and competing demand from several sectors. Large data center developers and tech companies may buy components in advance to mitigate lead time issues, which can be a useful strategy for components that are reasonably interchangeable across projects. Buyers should, however, be conscious of warranty erosion where there is a long gap between equipment purchase and deployment (for example, where the warranty period runs from delivery rather than from commissioning), as well as the risk of being left with superseded or redundant equipment in a fast-moving hardware market.

There is also well-documented pressure on the fast-evolving high-performance chip sector, which is key to AI data centers. Against the backdrop of a scramble to secure chips and rapidly improving chip technology, buyers have faced difficult decisions over whether to wait for the release of next-generation, higher-performing chips or to proceed with purchasing chips already on the market. The right decision for any buyer will depend on its intended use for the chips and on the time pressure it faces to bring its data center to market.

For elements of the AI data center supply chain such as immersion cooling and innovative network design, there is a real opportunity for data center developers and major tech companies to partner with startups and other small and medium-sized enterprises to develop new solutions to the heat, power, cooling and network issues particular to AI data centers. Any such collaboration should be underpinned from the outset by a clear joint venture or collaboration agreement settling each party's rights, obligations and liabilities, including, importantly, which party will own any intellectual property created and whether (and, if agreed, how) either party may use and commercialize any technology or products developed.

Modular construction

Across the data center sector, modular construction is emerging as an increasingly viable and attractive alternative to traditional design and construction, offering scalability and efficiency. Modular construction uses prefabricated modules that are shipped to site and quickly installed, reducing construction time and onsite activity. The modules come with different fit-outs, for example IT containers, power feed containers and logistics containers. For AI data centers where speed of deployment is important and the AI end use is relatively standard, this construction method has real potential. Modular construction also moves much of the work to centralized factories, limiting the need for skilled workers at the project site, which can be a real advantage for projects in remote locations or in markets with a limited skilled workforce or few licensed contractors.

Modular construction comes with its own considerations, which impact the procurement strategy, scope of work and contracts for a project.

- Interface with project site – developers must carry out detailed engineering and planning to ensure that the prefabricated units fit into, and interface properly with, the site layout, foundations and existing utility and network connections. If the module supplier offers a turnkey solution (including prefabrication, delivery and installation), the interface risk between the various parts of the works will generally be mitigated. Otherwise, where separate logistics and installation contractors are used, the scope of each contractor must be clearly defined and the buyer will need to manage the interface risk.

- Logistics – reliable and skilled logistics partners are key to moving the prefabricated modules to site, with liability for damage or defects caused during transport to site often a contested area, particularly if units have complex and high value fit-outs.

- Customization – one of the main advantages of modular construction is that it allows data centers to be built efficiently and to benefit from economies of scale. However, many AI data centers will inevitably need some level of customization. So as not to lose the efficiencies and value that modular construction offers, developers must balance the need for customization to meet specific client requirements, power densities and cooling needs against the benefits of standardization.

- Security and financing considerations – modular construction requires significant costs to be incurred before the modules are delivered to site. This can create challenges for developers and their financiers where suppliers require large payments before the modules are delivered and/or before the developer has clear legal ownership of them. The security arrangements available to mitigate this risk vary depending on the jurisdictions of the site and of the module fabrication location.

Vertical construction

The high computing power and rack spacing needs of AI data centers will require larger footprints, which, in markets where land availability is constrained, is likely to increase interest in vertical data centers. Constructing multi-story data centers is more capital-intensive than constructing traditional single-story facilities, particularly if additional piling and foundations are required to support heavier AI fit-outs and cooling systems, and economies of scale are generally considered difficult to achieve above three or four stories. However, in markets with high real estate costs and low tolerance for high latency, more multi-story AI data centers can be expected.

Constructing behind-the-meter power infrastructure

Data centers have traditionally drawn their power from the grid. However, with the massive energy needs of AI data centers, and with grid connection and transmission network constraints limiting the development of new data centers, more focus has turned to data centers building their own behind-the-meter energy generation and storage infrastructure. Such captive generation capacity has high upfront capital costs which, combined with intermittency, technical and regulatory issues, have generally limited these projects. However, AI data centers focused on end uses where high latency and even outages are less critical, such as supercomputing research or training large language models, could present more viable opportunities for captive intermittent green power such as wind and solar, coupled with backup lithium-ion battery energy storage systems. Building and operating such power infrastructure raises its own construction and supply chain issues. Because such assets have not been the core business of data center developers and tech companies to date, this space is ripe for collaboration between these companies and more traditional energy companies, including by outsourcing the power infrastructure under build-own-operate models.
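To illustrate why intermittency matters for captive solar-plus-storage, the following sketch runs a simple hour-by-hour energy balance for a hypothetical site supplied only by behind-the-meter solar and a lithium-ion battery, and counts the demand left unmet. Every figure (a constant 10 MW load, a 40 MW peak solar array, a 60 MWh / 20 MW battery, 90% charging efficiency) and the idealized half-sine solar profile are assumptions; a real assessment would use measured irradiance and load data and would model battery degradation.

```python
"""Hourly energy balance for a hypothetical behind-the-meter solar-plus-battery supply.

Simplifying assumptions (all hypothetical): constant data center load,
an idealized clear-sky solar profile, a battery that starts full, and
losses applied only when charging.
"""

import math
from dataclasses import dataclass


@dataclass
class BalanceResult:
    unmet_mwh: float      # demand that neither solar nor the battery could serve
    curtailed_mwh: float  # surplus solar that could not be stored
    hours_short: int      # hours with any unmet demand


def synthetic_solar_mw(peak_mw: float, hours: int = 24) -> list[float]:
    """Half-sine output between 06:00 and 18:00, zero overnight."""
    return [peak_mw * max(0.0, math.sin(math.pi * (h % 24 - 6) / 12))
            for h in range(hours)]


def simulate(load_mw: float, solar_mw: list[float], battery_mwh: float,
             battery_mw: float, charge_efficiency: float = 0.9) -> BalanceResult:
    """Dispatch the battery greedily in one-hour steps (so MW and MWh per step coincide)."""
    soc = battery_mwh  # state of charge in MWh; battery assumed to start full
    unmet = 0.0
    curtailed = 0.0
    hours_short = 0
    for solar in solar_mw:
        surplus = solar - load_mw
        if surplus >= 0:
            # Store surplus, limited by power rating and remaining capacity
            headroom = max(0.0, (battery_mwh - soc) / charge_efficiency)
            charge = min(surplus, battery_mw, headroom)
            soc = min(battery_mwh, soc + charge * charge_efficiency)
            curtailed += surplus - charge
        else:
            # Cover the deficit from storage, limited by power rating and stored energy
            deficit = -surplus
            discharge = min(deficit, battery_mw, soc)
            soc -= discharge
            shortfall = deficit - discharge
            if shortfall > 1e-9:
                unmet += shortfall
                hours_short += 1
    return BalanceResult(unmet, curtailed, hours_short)


# Hypothetical site: 10 MW constant load, 40 MW peak solar, 60 MWh / 20 MW battery
day = synthetic_solar_mw(peak_mw=40.0)
result = simulate(load_mw=10.0, solar_mw=day, battery_mwh=60.0, battery_mw=20.0)
print(f"Unmet demand: {result.unmet_mwh:.1f} MWh over {result.hours_short} hour(s)")
print(f"Curtailed solar: {result.curtailed_mwh:.1f} MWh")
```

Hours with unmet demand correspond to the outages that an end use such as model training may be able to tolerate and that latency- or availability-sensitive services generally cannot; rerunning `simulate` with a larger battery or array shows how much additional capacity it takes to close that gap.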

Evolution of data center infrastructure to realize AI's potential

AI is reshaping the architecture of data center infrastructure, requiring new ways to efficiently power, cool, host and otherwise support the next generation of computing. The announcement by AI start-up DeepSeek that it has developed a large language model using less computing power than existing AI models also raises questions about whether a single data center architecture will suit all AI end uses, and whether high-density computing data centers could become white elephants if AI technology evolves toward models that require less computing power. How quickly, and at what cost, physical digital infrastructure can be built to keep pace with rapidly evolving AI technology will be a major determinant of whether, and when, the promise of the AI revolution is realized.

White & Case means the international legal practice comprising White & Case LLP, a New York State registered limited liability partnership, White & Case LLP, a limited liability partnership incorporated under English law and all other affiliated partnerships, companies and entities.

This article is prepared for the general information of interested persons. It is not, and does not attempt to be, comprehensive in nature. Due to the general nature of its content, it should not be regarded as legal advice.

© 2025 White & Case LLP

View full image: Big Tech CapEx ($ billions) (PDF)

View full image: Applicable cooling technologies by rack density (PDF)
